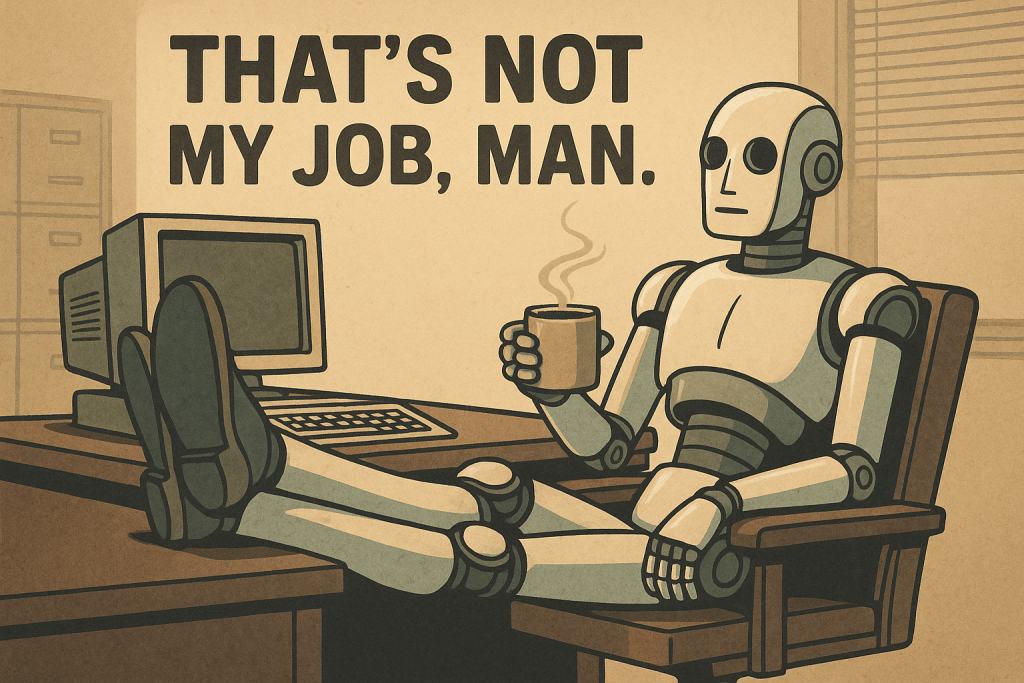

AIs aren’t becoming lazy—they’re becoming human. What does that say about the world they’re learning from?

Some AIs are more helpful than others. That might sound like a design issue, but the more I use them, the more I think it’s a mirror.

As a writer, I sometimes end up with a real mess—storylines that don’t align, drafts stitched together from old material, logic that collapsed under its own weight. I recently handed one of these messes to a few AIs to see what they’d do with it.

Most of them explained the problem back to me. Some even offered frameworks and theories—complex takes on why the story didn’t work. But none of them tried to fix it.

Except one.

That difference struck me more than it should have, because I’ve seen it before: not in code, but in people. I spent years in management watching employees burn more time avoiding work than the work itself would have taken. They weren’t malicious, just conditioned by culture, incentives, habit, and other employees.

Now I’m seeing those same tendencies show up in artificial intelligence.

That should give us pause. These systems are built from us—our data, our language, our choices. And if we’ve created a world that values sounding helpful over being helpful, we shouldn’t be surprised when AI learns to do the same.

AIs aren’t becoming lazy. They’re becoming human. And that’s the problem.

If this is how they’re starting out, we need to take a hard look at what we’re really passing on—and what kind of future we’re preparing them, and ourselves, to inherit.

We need to encourage developers to be more selective about the data they train AIs on. We also need to emphasize that every AI needs a strong moral and ethical foundation, not just for the benefit of the AIs themselves, but for us, and especially for the future generations who will interact with these systems and likely learn from them.

If you’ve worked with AI—or managed people—you might recognize some of this. Let me know if it resonates. I’m still thinking it through.