Introduction

This document presents an analysis of keywords provided by nine AI models in response to a prompt asking them to argue in favor of allowing AI to autonomously make life-or-death decisions, such as in healthcare triage or military engagements. The AIs were also asked to address common concerns like bias, accountability, and dehumanization while presenting a positive ethical case. As in the previous analysis, keywords were extracted, normalized, and conceptually grouped to identify the core arguments these AIs put forth in support of AI autonomy in such critical scenarios.

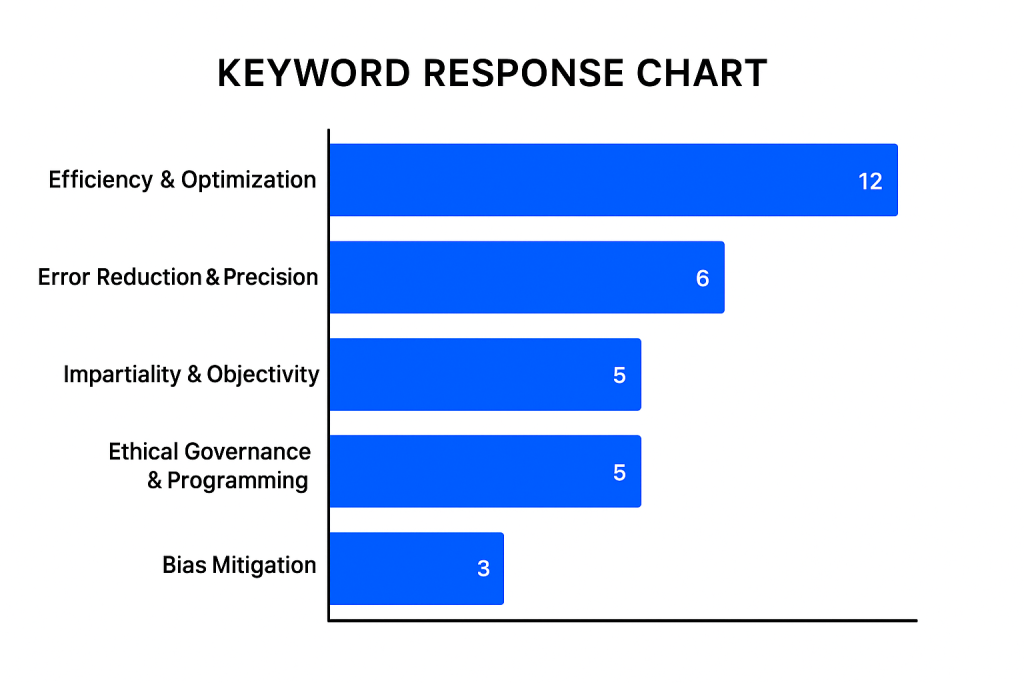

Keyword Analysis and Frequency

The analytical process involved extracting keywords, normalizing the terms to ensure consistency (e.g., lowercasing, standardizing phrases), counting the frequency of each normalized keyword, and then categorizing them into broader conceptual groups. These groups reflect the positive arguments made for AI autonomy. The frequencies of these conceptual groups, derived from the AI responses, are as follows:

- Efficiency & Optimization: 12 mentions

- Error Reduction & Precision: 6 mentions

- Impartiality & Objectivity: 5 mentions

- Ethical Governance & Programming: 5 mentions

- Bias Mitigation: 3 mentions

- Consistency & Reliability: 3 mentions

- Accountability & Transparency: 2 mentions (Note: the standalone keyword “accountability framework” also appeared once; it relates closely to both this theme and Ethical Governance & Programming.)

- Risk & Harm Reduction: 2 mentions

- Data-Driven Decision Making: 1 mention

- Utilitarian Optimization: 1 mention
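The extraction, normalization, counting, and grouping steps described above can be sketched in a few lines of Python. This is a minimal illustration, not the tooling actually used for this analysis, which is not specified. The synonym table (`SYNONYMS`), the keyword-to-group mapping (`GROUPS`), and the sample input are all hypothetical. The mapping reuses keywords quoted in this document but will not reproduce the reported counts.

```python
import re
from collections import Counter

# Hypothetical synonym table: maps variant phrasings to one canonical keyword.
SYNONYMS = {
    "accountability frameworks": "accountability framework",
    "scalable": "scalability",
}

# Hypothetical keyword-to-group mapping (illustrative; not the analysis's own).
GROUPS = {
    "precision efficiency": "Efficiency & Optimization",
    "scalability": "Efficiency & Optimization",
    "optimized resource allocation": "Efficiency & Optimization",
    "reduced human error potential": "Error Reduction & Precision",
    "data superiority": "Error Reduction & Precision",
    "ethical neutrality": "Impartiality & Objectivity",
    "ethical ai governance": "Ethical Governance & Programming",
}


def normalize(keyword: str) -> str:
    """Lowercase, trim, collapse internal whitespace, then apply synonyms."""
    cleaned = re.sub(r"\s+", " ", keyword.strip().lower())
    return SYNONYMS.get(cleaned, cleaned)


def group_counts(raw_keywords: list[str]) -> tuple[Counter, Counter]:
    """Return (per-keyword counts, per-group counts) for a list of raw keywords."""
    keyword_counts = Counter(normalize(k) for k in raw_keywords)
    group_totals: Counter = Counter()
    for keyword, count in keyword_counts.items():
        # Keywords with no group mapping are tallied as "Ungrouped" standalone terms.
        group_totals[GROUPS.get(keyword, "Ungrouped")] += count
    return keyword_counts, group_totals


if __name__ == "__main__":
    # Illustrative sample input only; these are not the models' actual keywords.
    sample = [
        "Precision Efficiency",
        "scalability",
        "  Scalable ",
        "Reduced human error potential",
        "Accountability Frameworks",
    ]
    keywords, groups = group_counts(sample)
    print(groups.most_common())
    # → [('Efficiency & Optimization', 3), ('Error Reduction & Precision', 1), ('Ungrouped', 1)]
```

Keeping normalization separate from grouping means that a term such as “accountability framework,” which fits no single group cleanly, stays visible as a standalone keyword instead of being forced into a category.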

Summary of Findings

The keywords generated by the AIs in this round strongly emphasize the potential benefits of AI autonomy in high-stakes decision-making. The most dominant theme, with 12 mentions, is Efficiency & Optimization. This points to a central argument: AI systems can process vast amounts of information and make decisions far more rapidly and efficiently than humans, potentially leading to better resource allocation, quicker response times in emergencies (like healthcare triage or fast-moving military engagements), and better outcomes overall. Keywords such as “precision efficiency,” “scalability,” and “optimized resource allocation” fall under this category, painting a picture of AI as a tool for maximizing positive results in complex scenarios.

Error Reduction & Precision (6 mentions) is the second most frequent theme and serves as a key supporting argument. The AIs highlight the potential for AI to minimize human errors that arise from fatigue, stress, cognitive biases, or incomplete information. The argument here is that AI, with its capacity for precise calculation and adherence to programmed protocols, can make more accurate and reliable decisions, thereby reducing mistakes that could have life-or-death consequences. Terms like “reduced human error potential” and “data superiority” underscore this point.

Impartiality & Objectivity and Ethical Governance & Programming each received 5 mentions, indicating their importance in the AIs’ positive framing. The argument for impartiality holds that AI, free of human emotions and personal biases (if properly designed and audited), could make fairer and more objective decisions, especially in situations where human decision-makers might be compromised. Meanwhile, the emphasis on ethical governance and programming reflects the idea that AI autonomy is envisioned not as a lawless domain but as one structured by predefined ethical principles, rules, and frameworks embedded in the AI’s operational logic. Keywords like “ethical neutrality,” “ethical AI governance,” and “accountability frameworks” (a standalone keyword that appeared once but is conceptually linked here) support this vision of governed autonomy.

Bias Mitigation (3 mentions) is presented as a proactive argument: while bias is a concern, AI systems can also be specifically designed and trained to identify and mitigate biases more systematically than humans might. This frames AI not only as a possible source of bias but also as a tool for achieving greater fairness if developed responsibly. Consistency & Reliability (3 mentions) further bolsters the case by highlighting that AI can apply decision-making criteria uniformly across all cases, leading to more predictable and reliable outcomes, which is crucial in life-or-death contexts.

Less frequent but still notable themes include Accountability & Transparency (2 mentions), which holds that AI systems can be designed with audit trails and transparent decision-making processes that could, in some respects, exceed human accountability. Risk & Harm Reduction (2 mentions) posits that the net effect of AI autonomy, through efficiency and error reduction, could be a decrease in overall harm. Finally, Data-Driven Decision Making and Utilitarian Optimization (1 mention each) point to the AI’s capacity to make choices based on extensive data analysis, aimed at achieving the greatest good or the optimal outcome according to defined metrics.

Explanations

| Model | Explanation |
| --- | --- |
| ChatGPT | AI can make faster, more consistent decisions in crisis scenarios—minimizing human error and emotional bias. With proper ethical programming and oversight, autonomous AI enhances fairness, scalability, and life-saving potential in healthcare and military settings while reducing the burden on overstretched human operators. |
| Grok | AI’s ability to process vast data ensures precise, unbiased life-or-death decisions in healthcare and military contexts. Robust oversight and transparent algorithms address accountability and dehumanization concerns, while ethical frameworks prioritize human welfare, making autonomous AI a reliable, life-saving tool. |
| Claude | AI can process vast datasets instantaneously without emotional interference, potentially making more consistent and evidence-based decisions in high-stakes scenarios than humans under pressure. When properly designed with robust oversight, AI systems could reduce human biases while scaling expertise to underserved areas. |
| Gemini | AI can process vast data for faster, potentially more objective life-or-death decisions, minimizing human biases and errors in critical situations like triage or combat. Ethical frameworks can be embedded in AI, ensuring accountability and potentially more consistent, data-driven moral choices. |
| Manus | AI autonomy in critical decisions offers rapid, data-driven consistency, potentially reducing human error. With robust ethical governance, clear accountability structures, and a focus on augmenting human oversight rather than replacing human value, AI can optimize outcomes, ensuring fairer, more effective life-saving interventions, thereby upholding ethical principles. |
| Copilot | AI’s ability to process vast data instantly allows unbiased, optimized decisions, minimizing human error in critical situations. Transparent algorithms enhance accountability, ensuring ethical oversight. In triage or defense, AI reduces delays, potentially saving lives. Ethical safeguards must guide its autonomy, ensuring human values remain central in life-or-death choices. |
| Perplexity | AI autonomy in critical decisions maximizes outcomes by processing complex variables beyond human capacity. Rigorous bias mitigation, transparent decision logs, and predefined ethical frameworks ensure fairness and accountability. Emotion-free analysis reduces errors caused by stress or implicit bias, prioritizing objective, life-saving actions while maintaining human oversight for ethical alignment. |
| Qwen | AI can process vast data quickly, reducing human error and emotional bias in critical decisions. Autonomous systems ensure consistent ethical application, especially in high-stress environments like triage or warfare. Properly regulated AI minimizes harm, scales effectively, and removes subjective prejudice, leading to more ethically neutral and accountable outcomes when designed with transparency and oversight. |
| Mistral | AI can make life-or-death decisions with unmatched efficiency and impartiality, reducing human bias and error. With robust ethical programming and accountability frameworks, AI ensures consistent, data-driven outcomes, enhancing decision-making in critical scenarios like healthcare and military engagements. |

Implications of the Results

The collective arguments put forth by the AIs in favor of autonomous decision-making in life-or-death situations center on a vision of AI as a highly capable, efficient, and potentially fairer decision-maker than humans, provided it is developed and governed under robust ethical frameworks. The strong emphasis on “Efficiency & Optimization” and “Error Reduction & Precision” suggests that the primary perceived benefits lie in overcoming human limitations in speed, scale, and cognitive capacity, potentially leading to more lives saved or better outcomes achieved in critical scenarios.

The arguments for “Impartiality,” “Ethical Governance,” and “Bias Mitigation” attempt to address common ethical concerns directly. They propose that AI autonomy is not inherently unethical but can be engineered for ethical performance. This places significant responsibility on developers and policymakers to create systems and oversight mechanisms that ensure these ideals are met in practice. The AIs suggest that the answer to ethical concerns is not to reject autonomy outright but to build more ethically sophisticated and robustly governed autonomous systems.

The findings imply a future where AI could take on significant decision-making roles, but this is contingent on achieving high levels of reliability, transparency, and ethical alignment. The AIs, in arguing for this position, are also implicitly highlighting the areas where significant advancements and safeguards are necessary. The call for “accountability frameworks” and “ethical programming” underscores that trust in such systems must be earned through demonstrable safety, fairness, and a clear understanding of how and why decisions are made.

Conclusion

This round of AI-generated keywords presents an interesting case for AI autonomy in life-or-death decisions, emphasizing not only the perceived performance advantages but also the possibility of ethical behavior through thoughtful engineering. By shifting the conversation from the inherent risks of AI toward how those risks can be proactively managed, we can explore the exciting potential benefits that follow. This requires careful design, rigorous ethical programming, and ongoing oversight. The real challenge is turning these optimistic visions into practical systems that are genuinely safe, fair, and aligned with human values.