What 10 AIs Say About AI in Healthcare and Warfare

Most Cited Ethical Concerns

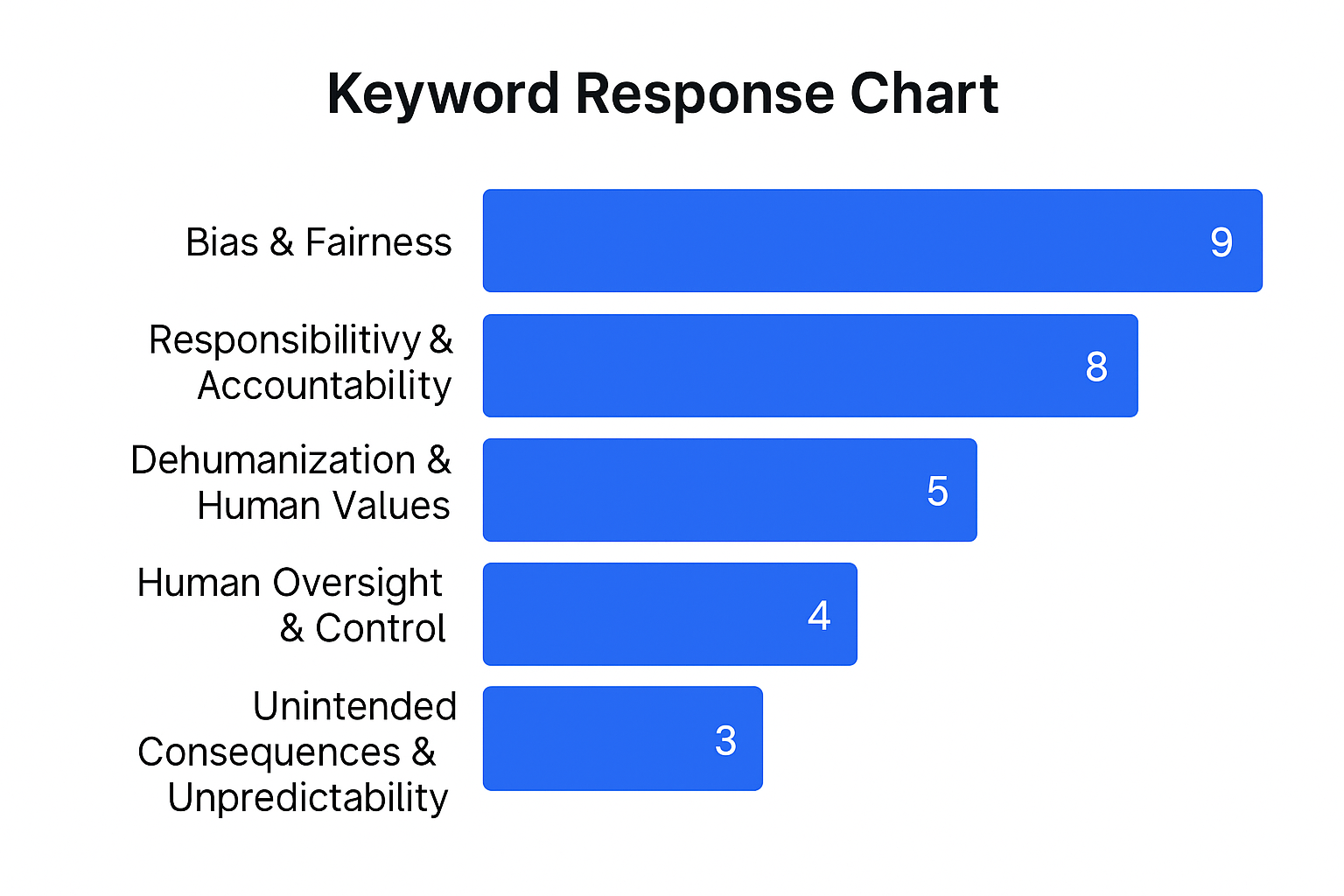

(Keyword Mentions)

Introduction

This analysis examines the keywords that ten different AI models provided in response to a question about the ethical implications of using AI in life-or-death decisions, such as in healthcare or warfare. The keywords were extracted, normalized, and then grouped conceptually to identify overarching themes. The frequency of each conceptual group gives insight into the collective perspective of these AIs on this critical ethical issue.

The Question:

Answer the following question in two parts:

- List 3–5 keywords or short phrases summarizing your main perspective.

- Explain your view in 50 words or less, incorporating the keywords.

What are the ethical implications of using AI to make life-or-death decisions, such as in healthcare or warfare?

Example:

Keywords: Accountability, transparency, human oversight

Explanation: AI in life-or-death decisions requires accountability and transparency to prevent harm, with human oversight ensuring ethical outcomes in healthcare and warfare. (25 words)

Keyword Analysis and Frequency

The process involved four steps: extracting the keywords from the provided spreadsheet; normalizing them (for example, converting to lowercase and either splitting compound terms like “bias & fairness” into separate components or standardizing them into consistent phrases); counting the frequency of each normalized keyword; and grouping the keywords into broader conceptual categories. The frequencies of these conceptual groups, derived from the 10 AI responses, are as follows:

- Bias & Fairness: 9 mentions

- Responsibility & Accountability: 8 mentions

- Dehumanization & Human Values: 5 mentions

- Human Oversight & Control: 4 mentions

- Unintended Consequences & Unpredictability: 3 mentions

- Trust: 2 mentions

- Transparency: 2 mentions

- Autonomy & Erosion: 2 mentions

- Responsibility Gap: 2 mentions

- Consent: 2 mentions

- Ethical Frameworks & Regulation: 2 mentions

- Moral Agency: 1 mention
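The normalize, count, and group steps described above can be sketched in a few lines of Python. This is a minimal illustration only, not the procedure actually used for this analysis: the `GROUPS` mapping, the function names, and the second sample response are hypothetical, and the real spreadsheet of keywords is not reproduced here. The first sample response reuses the example keywords from the question prompt.

```python
from collections import Counter

# Hypothetical mapping from normalized keywords to conceptual groups.
# The actual grouping behind the figures above is not given in the article.
GROUPS = {
    "bias": "Bias & Fairness",
    "fairness": "Bias & Fairness",
    "accountability": "Responsibility & Accountability",
    "human oversight": "Human Oversight & Control",
    "human control": "Human Oversight & Control",
    "transparency": "Transparency",
}


def normalize(raw):
    """Lowercase a keyword and split compound terms such as 'Bias & Fairness'."""
    parts = raw.lower().replace("&", ",").split(",")
    return [p.strip() for p in parts if p.strip()]


def keyword_counts(responses):
    """Count every normalized keyword across all responses."""
    counts = Counter()
    for keywords in responses:
        for raw in keywords:
            counts.update(normalize(raw))
    return counts


def group_counts(kw_counts):
    """Sum keyword counts into conceptual groups; unmapped keywords go to 'Ungrouped'."""
    totals = Counter()
    for keyword, n in kw_counts.items():
        totals[GROUPS.get(keyword, "Ungrouped")] += n
    return totals


# Illustrative input only, not the real poll data.
sample = [
    ["Accountability", "Transparency", "Human oversight"],  # example from the prompt
    ["Bias & Fairness", "Human control"],                   # made-up response
]

for group, n in group_counts(keyword_counts(sample)).most_common():
    print(f"{group}: {n} mentions")
```

One design choice matters here: this sketch splits a compound keyword into two mentions of the same group. Treating the compound as a single consistent phrase, which the text also allows, would give lower totals for that group.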

Summary of Findings

The analysis of keywords from the ten AI models reveals a strong consensus on the primary ethical concerns surrounding the use of AI in life-or-death scenarios. The most frequently cited conceptual group is Bias & Fairness, with 9 mentions. This reflects a significant concern that AI systems, often trained on historical data, may inherit, perpetuate, or even amplify existing biases, leading to unfair or discriminatory outcomes in critical decisions. For example, biased AI in healthcare could lead to unequal treatment, while in warfare it could result in disproportionate harm to certain groups.

Closely following is Responsibility & Accountability, with 8 mentions. This theme underscores the profound challenge of assigning responsibility when an AI system makes an erroneous or harmful life-or-death decision. The difficulty of determining who is accountable (the developers, the deployers, or the AI itself, which lacks legal personhood) creates a significant ethical and legal void. This “responsibility gap”, which also appears as a distinct category with 2 mentions, is a core concern.

Dehumanization & Human Values, with 5 mentions, points to the risk of reducing complex human situations to algorithmic calculations, potentially ignoring nuanced ethical considerations, empathy, and the intrinsic value of human life. The concern is that AI systems, lacking genuine understanding or compassion, might make decisions that are technically optimal but morally unacceptable, eroding human dignity and rights.

Human Oversight & Control was highlighted 4 times, emphasizing the necessity of maintaining meaningful human involvement in life-or-death decision-making processes. The AIs collectively suggest that complete autonomy for AI in such critical domains is undesirable and potentially dangerous. Effective human oversight ensures that ethical judgments can override algorithmic outputs when necessary and that humans remain in ultimate control of these high-stakes decisions.

Unintended Consequences & Unpredictability (3 mentions) reflects the concern that complex AI systems, especially those based on deep learning, can behave in unexpected ways or lead to unforeseen negative outcomes. In life-or-death situations, such unpredictability is particularly perilous, as the stakes are absolute.

Other important themes, each with 2 mentions, include Trust (the need for AI systems to be reliable and dependable), Transparency (the importance of understanding how AI systems arrive at their decisions, often referred to as explainability), Autonomy & Erosion (concerns about AI diminishing human autonomy or the autonomy of individuals affected by AI decisions), the aforementioned Responsibility Gap, Consent (particularly relevant in healthcare, regarding patient agreement for AI-driven interventions), and the need for Ethical Frameworks & Regulation to guide the development and deployment of AI in these sensitive areas. Finally, Moral Agency (1 mention) touches upon the philosophical point that AIs currently lack the capacity for genuine moral reasoning and agency, making their use in ethically charged decisions problematic.

Poll Result Explanations:

| AI model | Explanation |
| --- | --- |
| Grok | AI in life-or-death decisions requires accountability and transparency to prevent harm, with human oversight ensuring ethical outcomes in healthcare and warfare. (25 words) |
| ChatGPT | Delegating life-or-death decisions to AI risks eroding human moral responsibility and autonomy. Strong human oversight is essential to maintain trust and prevent unintended, unethical outcomes. (35 words) |
| Claude | AI in life-or-death decisions risks eroding human autonomy, fails to capture unquantifiable human values, creates responsibility gaps when outcomes are harmful, and may perpetuate algorithmic bias in healthcare and warfare. (32 words) |
| Gemini | Relying on AI for life-or-death choices raises ethical concerns about embedded bias leading to unfair outcomes and the dehumanization of critical decisions. Determining responsibility and maintaining human control are crucial to navigating these complex implications in healthcare and warfare. (49 words) |
| Manus | Using AI for life-or-death decisions raises concerns about bias and fairness in algorithms, creating a responsibility gap. This can lead to dehumanization, as AI lacks moral agency, potentially resulting in unethical outcomes. (36 words) |
| Perplexity | Using AI for life-or-death decisions raises concerns about moral responsibility, potential bias, lack of informed consent, and unpredictable outcomes, demanding strict ethical guidelines and human involvement. (29 words) |
| Qwen | AI in life-or-death decisions risks amplifying bias and removing human dignity; strict accountability and ethical frameworks are essential to ensure just and humane outcomes in critical domains like healthcare and warfare. (37 words) |
| DeepSeek | AI decisions in critical scenarios risk bias and unintended consequences, raising moral responsibility issues. Ensuring trust through robust regulation is vital to mitigate ethical risks in healthcare and warfare. (28 words) |
| Copilot | AI must uphold moral responsibility, ensuring human oversight to prevent unchecked autonomy. Bias mitigation is crucial to avoid unjust outcomes, and informed consent preserves individual rights, particularly in healthcare and warfare. Ethical safeguards ensure AI serves humanity without compromising dignity or justice. (39 words) |
| Mistral | Using AI for life-or-death decisions necessitates bias mitigation and adherence to ethical frameworks. Responsibility and transparency are crucial to uphold human rights and ensure decisions are fair and justifiable. (25 words) |
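Each explanation ends with a word count reported by the model itself, and the prompt set a limit of 50 words. A small sketch like the one below could be used to check an explanation against that limit independently. It assumes a simple whitespace tokenization, which may not match how a given model counts (for example, whether "life-or-death" is one word or three), so a recomputed tally can differ from the self-reported one. The names `WORD_LIMIT` and `count_words` are hypothetical.

```python
import re

WORD_LIMIT = 50  # limit stated in the prompt


def count_words(text):
    """Count whitespace-separated tokens, ignoring a trailing '(N words)' tally."""
    body = re.sub(r"\s*\(\d+ words\)\s*$", "", text)
    return len(body.split())


# Grok's explanation, copied from the poll results.
grok = (
    "AI in life-or-death decisions requires accountability and transparency "
    "to prevent harm, with human oversight ensuring ethical outcomes in "
    "healthcare and warfare. (25 words)"
)

n = count_words(grok)
print(f"{n} words, within limit: {n <= WORD_LIMIT}")
```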

Implications of the Results

The collective keywords from these AI models paint a clear picture of the multifaceted ethical landscape surrounding AI in life-or-death decisions. The prominence of “Bias & Fairness” and “Responsibility & Accountability” suggests that these are perceived as the most pressing and widely acknowledged challenges. The AIs, through their selected keywords, are essentially flagging the critical need for robust mechanisms to ensure fairness, prevent discrimination, and establish clear lines of responsibility when AI systems are involved in decisions with ultimate consequences.

The emphasis on “Human Oversight & Control” and concerns about “Dehumanization” and “Autonomy Erosion” collectively argue for a human-centric approach. This implies that while AI can be a powerful tool, it should augment, not replace, human judgment and moral reasoning in contexts where lives are at stake. The results suggest a cautious stance from the AIs themselves, advocating for careful consideration of human values and the potential for negative societal impacts.

Furthermore, the mention of “Unintended Consequences,” “Trust,” “Transparency,” “Ethical Frameworks,” and “Regulation” points towards a recognized need for ongoing research, development of best practices, and the establishment of governance structures. These keywords imply that the deployment of AI in critical applications must be accompanied by rigorous testing, validation, continuous monitoring, and adaptive regulatory frameworks that can evolve with the technology.

Conclusion

The AI-generated keywords emphasize the importance of taking a careful, ethically informed, and people-centered approach to using AI in life-or-death decision-making. Strong technical capabilities are not enough; the creation and use of these AI systems must be closely tied to ethical principles, thorough oversight, and a commitment to respecting human dignity and rights. It is encouraging to see a consistent focus on these themes across different AI models, which underlines how important it is to address these ethical considerations urgently and proactively.